We hope you are following along and have installed Python and Anaconda on your system. If not, please refer here and install them before proceeding further.

If you have system restrictions, you can instead log in to Google Colab for free and work there. Colab is very similar to the Jupyter notebooks we will be using throughout this training.

Note: You can download all the notebooks used in this example here.

Installations

The first step is to install the NLTK library and the NLTK data.

1. Install NLTK using the pip command

pip install nltk

[Image: installing nltk]

Since NLTK is already installed on my system, pip reports "Requirement already satisfied".

Instead of using a Jupyter Notebook, we can also create a virtual environment on our system and follow these steps from the conda prompt or a Python shell.

2. Download NLTK data

import nltk
nltk.download()

This opens the NLTK Downloader, which lists the corpora, models, and other data packages available for NLTK. For now, we simply select "all" and proceed.

Great, we are now set to experiment with NLTK.

Basic Commands

For these examples, we will import the NLTK book module, nltk.book (refer to the citations for details).

- Importing nltk book

|

| nltk book import |

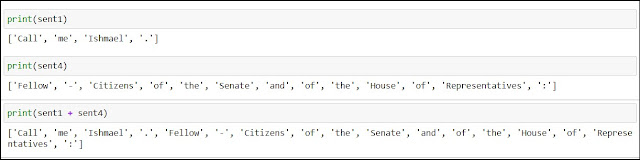

Here, we have imported everything from the nltk.book module (from nltk.book import *). This loads sample texts and sentences from the NLTK book in two formats: each sentence (sent1 to sent9) is a plain list of words, and each text (text1 to text9) is an NLTK Text object.

We can view any of these texts and sentences directly by typing its name, for example sent1 or text1, as shown in the image above.

The first basic action we usually want to perform on a text is to search for a token in it. Rather than searching manually, we can use the concordance() method of an NLTK Text object, for example by calling concordance("world") on one of the book texts. It not only finds every occurrence of the token but also shows the surrounding text in which it is used. In our example, it scanned the entire text almost instantly and found around 350 occurrences of the word "world".
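To make the idea concrete, here is a minimal pure-Python sketch of what a concordance (keyword-in-context) view does. It is not NLTK's implementation: the function name `concordance`, its `context` parameter (the number of tokens shown on each side) and the toy token list are illustrative assumptions. NLTK's own method instead pads each line to a fixed character width and, by default, displays at most 25 matches.

```python
def concordance(tokens, word, context=5):
    """Return keyword-in-context lines for every occurrence of `word`.

    Simplified sketch, not NLTK's implementation: matching is
    case-insensitive, and `context` tokens are shown on each side.
    """
    target = word.lower()
    lines = []
    for i, tok in enumerate(tokens):
        if tok.lower() == target:
            left = " ".join(tokens[max(0, i - context):i])   # tokens before the hit
            right = " ".join(tokens[i + 1:i + 1 + context])  # tokens after the hit
            lines.append(f"{left} [{tok}] {right}")
    return lines


# Hypothetical toy token list standing in for a real NLTK text.
tokens = "The world is big . Our World needs peace and the world listens".split()
for line in concordance(tokens, "world", context=3):
    print(line)
```

Because the match is case-insensitive, "World" and "world" are both reported, which is also how NLTK's concordance behaves.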

Next, we might want to find other words that are used like the word we searched for. This can be done with the similar() method of a Text, for example similar("world"). Note that it does not look up meanings: it lists words that appear in the same contexts as the given word (between the same left and right neighbours), and such words often, though not always, have a related meaning. In our example, it returned words such as "earth", "country" and "union" for "world".
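The following is a rough pure-Python sketch of this "distributional" similarity, loosely modelled on how NLTK's Text builds a context index (an assumption about internals, not a copy of them). A context is the pair of words immediately to the left and right of a word; other words are ranked by how many distinct contexts they share with the target. The helper names and the toy sentence are hypothetical.

```python
from collections import Counter, defaultdict


def context_index(tokens):
    """Map each lowercased word to the set of (left, right) neighbours it appears between.

    Non-alphabetic tokens are dropped first, and the text boundaries
    are marked with *START* and *END*.
    """
    words = [t.lower() for t in tokens if t.isalpha()]
    index = defaultdict(set)
    for i, w in enumerate(words):
        left = words[i - 1] if i > 0 else "*START*"
        right = words[i + 1] if i + 1 < len(words) else "*END*"
        index[w].add((left, right))
    return index


def similar(tokens, word, num=20):
    """Rank other words by how many distinct contexts they share with `word`."""
    index = context_index(tokens)
    target = word.lower()
    target_contexts = index.get(target, set())
    scores = Counter()
    for other, contexts in index.items():
        shared = len(contexts & target_contexts)
        if other != target and shared:
            scores[other] = shared
    return [w for w, _ in scores.most_common(num)]


# Hypothetical toy text: "earth" and "country" both appear between "the" and "is".
tokens = "the world is round . the earth is round . the country is large .".split()
print(similar(tokens, "world"))
```

This shows why the results are only loosely related in meaning: any word that fills the same slot between the same neighbours scores, whatever it means.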

The next thing we might want to know is the context in which a group of words is used in our text. Say we found that the word most similar to our original word "world" is "earth"; to see the contexts these two words share, we can use the common_contexts() method, which takes a list of words, for example common_contexts(["world", "earth"]).
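As a minimal sketch of the idea, assuming the same (left, right) neighbour definition of "context" used above, the contexts shared by a group of words are just the intersection of each word's context set. The function names and toy text are hypothetical; the output uses a left_right style similar to what NLTK prints, but unlike NLTK (which ranks shared contexts by frequency) this sketch simply sorts them alphabetically.

```python
def contexts_of(tokens, word):
    """Return the set of (left, right) neighbour pairs around `word`.

    Simplified sketch: lowercases tokens and drops non-alphabetic ones first.
    """
    words = [t.lower() for t in tokens if t.isalpha()]
    target = word.lower()
    found = set()
    for i, w in enumerate(words):
        if w == target:
            left = words[i - 1] if i > 0 else "*START*"
            right = words[i + 1] if i + 1 < len(words) else "*END*"
            found.add((left, right))
    return found


def common_contexts(tokens, words):
    """Return contexts shared by every word in `words`, formatted as left_right."""
    shared = set.intersection(*(contexts_of(tokens, w) for w in words))
    return sorted(f"{left}_{right}" for left, right in shared)


# Hypothetical toy text in which "world" and "earth" occur in the same two slots.
tokens = ("the world is round . the earth is round . "
          "this world of ours . this earth of ours .").split()
print(common_contexts(tokens, ["world", "earth"]))
```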

- Size

We have searched our data and found similar words in it, but do we know the size of the text we are dealing with? It is as simple as calling Python's built-in len(), which returns the number of tokens (words and punctuation symbols) in the text.

[Image: length of text]

What about the number of distinct tokens in the text? This is again a simple step: use set() to build the set of distinct tokens, then measure its size with len(), as shown below.

[Image: length of distinct tokens]

Finally, to find how often a particular word or token occurs in the text, we can simply use the count() method, for example count("world"). Note that count() is case-sensitive, so "World" and "world" are counted separately.
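The three size measurements above can be sketched on a plain Python list of tokens; an NLTK Text supports the same len(), set() and count() calls. The toy sentence is a hypothetical stand-in for a real text.

```python
# Hypothetical toy token list; an NLTK Text supports the same calls.
tokens = "the cat sat on the mat and the dog sat too".split()

num_tokens = len(tokens)          # every token, repeats included
vocabulary = set(tokens)          # distinct tokens only
num_distinct = len(vocabulary)    # size of the vocabulary
the_count = tokens.count("the")   # occurrences of one token (case-sensitive)

print(num_tokens, num_distinct, the_count)
```

Here the text has 11 tokens but only 8 distinct ones, because "the" appears three times and "sat" twice.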

