End of Tail Imputation is another important Imputation technique. It was developed as an enhancement to the Arbitrary Value Imputation technique, to overcome that method's biggest problem: selecting the arbitrary value to impute for each variable. Choosing that value by hand is hard for the user, and in a large dataset it becomes even more difficult to select a suitable arbitrary value every time and for every variable/column.

So, to avoid selecting the arbitrary value by hand every time, End of Tail Imputation was introduced: it selects the arbitrary value automatically from the end (tail) of the variable's distribution.

Now the question is: how do we select this value, and how do we impute it?

The value is selected using one of these simple rules:

- If the variable is normally distributed, we can use the mean plus/minus 3 times the standard deviation.

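- If the variable is skewed, we can use the Inter Quartile Range (IQR) proximity rule: Q3 + 1.5 × IQR for the right tail or Q1 − 1.5 × IQR for the left tail, where IQR = Q3 − Q1 (a factor of 3 is also common).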

- We can also take the min/max value and multiply it by a constant such as 2 or 3.
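The three rules above can be sketched as a single helper. This is a minimal illustration, not the article's code: the function name `end_of_tail_value` and its `method`, `tail` and `fold` parameters are hypothetical, `fold` defaults to 3 (pass 1.5 for the classic IQR rule), and the "max" rule is only implemented for the right tail.

```python
import numpy as np
import pandas as pd


def end_of_tail_value(s: pd.Series, method: str = "gaussian",
                      tail: str = "right", fold: float = 3.0) -> float:
    """Hypothetical helper: end-of-tail imputation value from the non-missing part of s.

    method: "gaussian" (mean +/- fold * std), "iqr" (IQR proximity rule)
            or "max" (max * fold, right tail only in this sketch).
    """
    s = s.dropna()
    if tail not in ("right", "left"):
        raise ValueError(f"unknown tail: {tail}")
    if method == "gaussian":
        # Roughly normal variable: mean plus (or minus) fold standard deviations
        sign = 1 if tail == "right" else -1
        return s.mean() + sign * fold * s.std()
    if method == "iqr":
        # Skewed variable: IQR proximity rule built on the quartiles
        q1, q3 = s.quantile(0.25), s.quantile(0.75)
        iqr = q3 - q1
        return q3 + fold * iqr if tail == "right" else q1 - fold * iqr
    if method == "max":
        # Multiply the observed maximum by a constant such as 2 or 3
        if tail != "right":
            raise ValueError("this sketch only supports the right tail for 'max'")
        return s.max() * fold
    raise ValueError(f"unknown method: {method}")


values = pd.Series([1, 2, 3, 4, 5, np.nan])
print(end_of_tail_value(values, "gaussian"))
print(end_of_tail_value(values, "iqr", fold=1.5))  # 4 + 1.5 * (4 - 2) = 7.0
print(end_of_tail_value(values, "max", fold=2))    # 5 * 2 = 10.0
```

Missing values are dropped before computing the statistics, so the value is always derived from observed data only.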

Now let's look at the assumptions we need to keep in mind and the limitations of this technique; after that, we will get our hands dirty with some code.

Assumptions

Before performing this imputation, we must split the data into train and test sets and select the imputation value from the train set only. That same value must then be used to impute both the train and test sets.
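A minimal sketch of that workflow, assuming synthetic data in an `Age` column and a simple random 80/20 split with pandas `sample` (scikit-learn's `train_test_split` would work just as well). The point is that `end_value` is learned from `train` alone and then reused unchanged for `test`:

```python
import numpy as np
import pandas as pd

# Synthetic stand-in data (hypothetical): 200 ages with 40 values missing
rng = np.random.default_rng(0)
df = pd.DataFrame({"Age": rng.normal(30, 10, 200)})
df.loc[rng.choice(200, 40, replace=False), "Age"] = np.nan

# 1. Split first: a simple random 80/20 train/test split
train = df.sample(frac=0.8, random_state=0).copy()
test = df.drop(train.index).copy()

# 2. Learn the end-of-tail value from the train set only
end_value = train["Age"].mean() + 3 * train["Age"].std()

# 3. Reuse that same value to fill both sets
train["Age"] = train["Age"].fillna(end_value)
test["Age"] = test["Age"].fillna(end_value)
```

Storing `end_value` once is also what makes the method usable in production: new rows are filled with the same number.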

Also note that, since the method relies on statistics such as the mean, standard deviation, quartiles, or min/max values, it can be used only for numerical variables.
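In pandas, one way to restrict the technique to numerical variables is `select_dtypes`. The sketch below uses hypothetical columns (the categorical `Embarked` column is made up for illustration) and keeps only numeric columns that actually contain missing values:

```python
import numpy as np
import pandas as pd

# Hypothetical columns; "Embarked" is categorical, so it must be excluded
df = pd.DataFrame({
    "Age": [22.0, np.nan, 35.0, 58.0],
    "Fare": [7.25, 71.28, np.nan, 8.05],
    "Embarked": ["S", "C", None, "S"],
})

# Only numerical columns are candidates for End of Tail Imputation
numeric_cols = df.select_dtypes(include="number").columns
cols_to_impute = [c for c in numeric_cols if df[c].isna().any()]
print(cols_to_impute)  # ['Age', 'Fare']
```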

Advantages

Now let's look at the advantages of this technique:

- It is easy to implement.

- We can get the complete dataset very quickly.

- We can use this technique in Production.

- It can capture the importance of "missingness", if it exists, because the imputed values sit at the tail, away from the bulk of the observed data.

Disadvantages

It also has a few limitations:

- It can distort the original distribution of the variable.

- It can also distort the original variance of the variable.

- It also distorts the covariance between the imputed variable and the remaining variables of the dataset.

- It can only be used for Numerical variables.

- True outliers may be masked in the distribution.
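The variance and covariance distortion can be reproduced with a small simulation. This is a sketch on synthetic data, not the article's dataset: `Fare` is a hypothetical column constructed to rise with `Age`, and 20% of `Age` is blanked out at random before applying the mean + 3 × std rule:

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
n = 2000

# Synthetic data: Fare is constructed to rise with Age (hypothetical columns)
age = rng.normal(30, 10, n)
fare = 2 * age + rng.normal(0, 5, n)
df = pd.DataFrame({"Age": age, "Fare": fare})

# Blank out 20% of Age completely at random
df.loc[rng.choice(n, n // 5, replace=False), "Age"] = np.nan

# End of Tail Imputation with the mean + 3 * std rule
end_value = df["Age"].mean() + 3 * df["Age"].std()
df["Age_Impute"] = df["Age"].fillna(end_value)

# pandas skips NaNs, so the "before" numbers use only the observed rows
print("Variance:", df["Age"].var(), "->", df["Age_Impute"].var())
print("Cov with Fare:", df["Age"].cov(df["Fare"]), "->", df["Age_Impute"].cov(df["Fare"]))
```

Because every imputed row receives the same far-out value, the variance of the variable increases, while its covariance with `Fare` is diluted: in the imputed rows `Age_Impute` no longer moves with `Fare`.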

Code

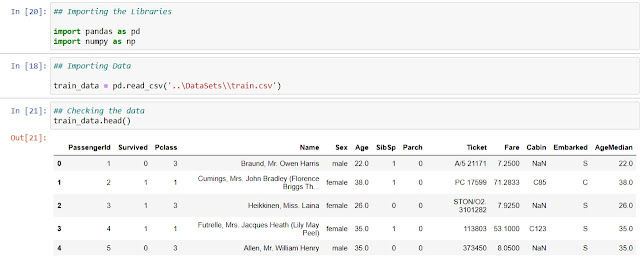

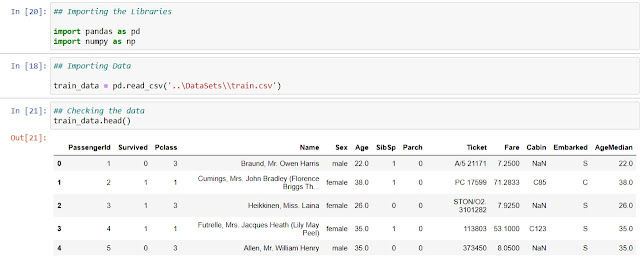

1. Importing the Libraries and Data, and checking the data.
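The original code for this step is only available as a screenshot. As a hedged stand-in, this sketch builds a small made-up DataFrame with an `Age` column (the `Fare` column and every value are hypothetical, not the article's dataset) and inspects it with `head()` and `info()`:

```python
import numpy as np
import pandas as pd

# Made-up stand-in data; replace with your own loading code,
# e.g. pd.read_csv pointed at your file
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, np.nan, 54.0, 2.0, 27.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 8.05, 51.86, 21.08, 11.13],
})

print(df.head())  # first five rows
df.info()         # dtypes and non-null counts per column
```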

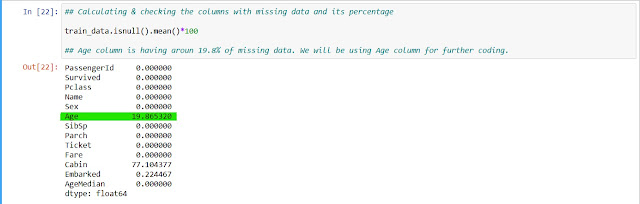

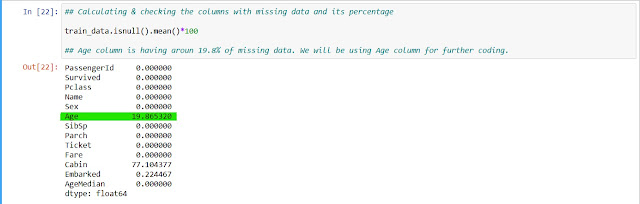

2. Checking the percentage of missing values in the data frame.
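A minimal sketch of the missing-value check on the same hypothetical data. `isnull()` marks missing cells as `True`, and the mean of a boolean column is the fraction of `True` values, so multiplying by 100 gives a percentage per column:

```python
import numpy as np
import pandas as pd

# Same made-up stand-in data as in step 1
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, np.nan, 54.0, 2.0, 27.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 8.05, 51.86, 21.08, 11.13],
})

# Fraction of missing cells per column, expressed as a percentage
missing_pct = df.isnull().mean() * 100
print(missing_pct)  # Age 25.0, Fare 0.0
```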

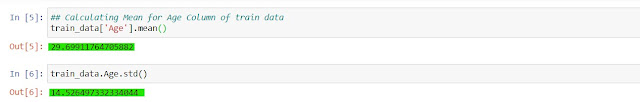

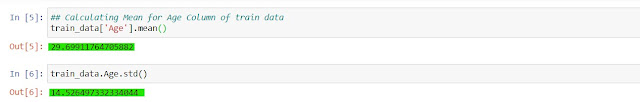

3. Calculating Mean and Standard Deviation
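Continuing the same hypothetical example, this sketch computes the mean and standard deviation of `Age` from its non-missing values and derives the right-tail value mean + 3 × std. Note that pandas `std()` skips NaNs and uses the sample standard deviation (`ddof=1`) by default:

```python
import numpy as np
import pandas as pd

# Same made-up stand-in data as in step 1
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, np.nan, 54.0, 2.0, 27.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 8.05, 51.86, 21.08, 11.13],
})

age_mean = df["Age"].mean()  # NaNs are skipped by default
age_std = df["Age"].std()    # sample std (ddof=1), NaNs skipped

# Right end of the distribution: mean + 3 * standard deviation
end_value = age_mean + 3 * age_std
print(age_mean, age_std, end_value)
```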

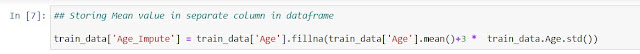

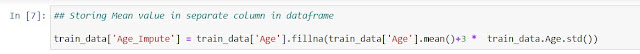

4. Performing Imputation
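One way to perform the imputation, assuming the same toy data: the end-of-tail value fills the gaps in a new `Age_Impute` column, so the original `Age` column stays available for comparison in the next steps:

```python
import numpy as np
import pandas as pd

# Same made-up stand-in data as in step 1
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, np.nan, 54.0, 2.0, 27.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 8.05, 51.86, 21.08, 11.13],
})

end_value = df["Age"].mean() + 3 * df["Age"].std()

# Write into a new column instead of overwriting Age
df["Age_Impute"] = df["Age"].fillna(end_value)
```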

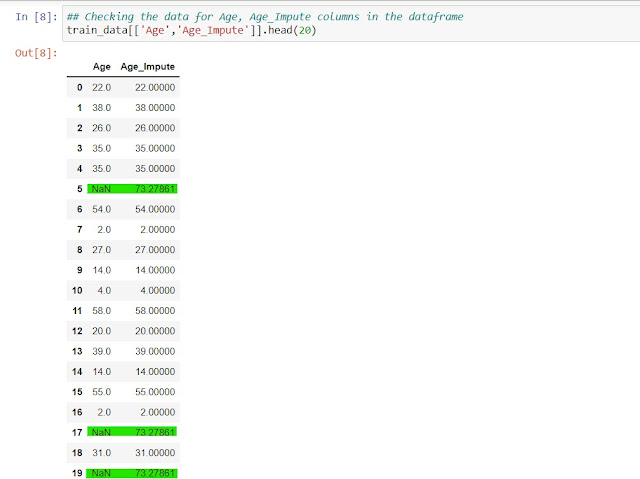

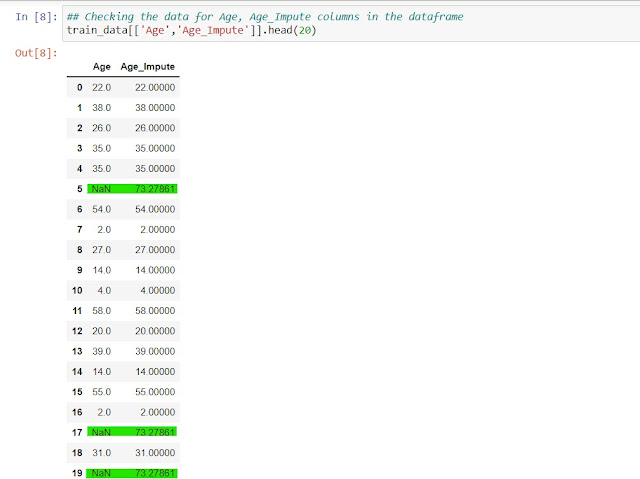

5. Checking the data for Age & Age_Impute columns in the data frame
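To inspect the result, this sketch (same hypothetical data) shows only the rows where `Age` was originally missing, next to their imputed values in `Age_Impute`:

```python
import numpy as np
import pandas as pd

# Same made-up stand-in data as in step 1
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, np.nan, 54.0, 2.0, 27.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 8.05, 51.86, 21.08, 11.13],
})
end_value = df["Age"].mean() + 3 * df["Age"].std()
df["Age_Impute"] = df["Age"].fillna(end_value)

# Keep only the rows where Age was originally missing
imputed_rows = df.loc[df["Age"].isnull(), ["Age", "Age_Impute"]]
print(imputed_rows)
```

For this toy data the imputed value lies well above the largest observed age, which is exactly what places it at the end of the tail.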

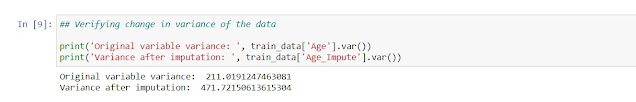

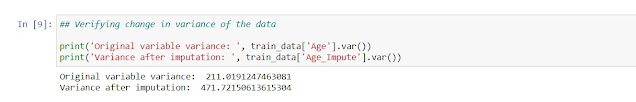

6. Verifying the change in variance of the data
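A hedged sketch of the variance check on the same toy data, comparing the sample variance of `Age` (NaNs skipped, so only observed values count) with that of `Age_Impute`:

```python
import numpy as np
import pandas as pd

# Same made-up stand-in data as in step 1
df = pd.DataFrame({
    "Age": [22.0, 38.0, np.nan, 35.0, np.nan, 54.0, 2.0, 27.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 8.05, 51.86, 21.08, 11.13],
})
end_value = df["Age"].mean() + 3 * df["Age"].std()
df["Age_Impute"] = df["Age"].fillna(end_value)

var_before = df["Age"].var()        # observed values only (NaNs skipped)
var_after = df["Age_Impute"].var()  # observed + imputed values
print(f"Variance before: {var_before:.2f}, after: {var_after:.2f}")
```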

As we can see, the variance of the imputed column has changed significantly, so extra caution should be taken when selecting an Imputation technique.

There are also Python libraries that can perform End of Tail Imputation directly in a single line of code. We will cover them in a separate article.

Summary

In this Quick Note, we studied a popular Imputation technique, End of Tail Imputation. We looked at its assumptions, advantages and disadvantages, as well as basic Python code to perform it.

That's all from here. Until then... this is the Quick Data Science Team signing off.

Comment below to get the complete notebook and dataset.
