Introduction

A Decision Tree is a flowchart-like structure made of nodes and branches: each internal node represents a condition on an attribute, and each branch represents an outcome of that condition. In a binary tree every condition has two outcomes (e.g. Head or Tail in a coin flip), and the final predictions sit at the leaves.

Decision trees are very helpful for predicting the outcome of an action, whether that outcome is a class (such as a binary label) or a numeric value. Beyond building predictive models, they can also be used for tasks such as imputation and encoding.

In variable encoding, each variable is encoded using the predictions of a decision tree.

A decision tree is fitted on a single feature and the target variable, and the original values of that feature are then replaced with the tree's predictions.
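The procedure above can be sketched in plain Python without third-party libraries. This is a minimal sketch under stated assumptions, not the encoder used in the practical section: it assumes a numeric target, maps the categories to integer codes in sorted order, and grows a small squared-error regression tree on those codes. The helper names (`fit_tree_encoder`, `_build`, `_predict`, `_sse`) and the toy `city`/`price` data are hypothetical.

```python
# Hypothetical pure-Python sketch of decision tree encoding for ONE categorical
# feature. Assumptions: numeric target, squared-error regression tree,
# categories coded as integers in sorted order.


def _sse(ys):
    """Sum of squared errors of ys around their mean."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def _build(rows, depth, max_depth):
    """Grow a regression tree on (code, target) rows.

    A leaf is a float (mean target of its rows); an internal node is a tuple
    (threshold, left, right) that sends code <= threshold to the left.
    max_depth=None means no depth limit.
    """
    ys = [y for _, y in rows]
    codes = sorted({x for x, _ in rows})
    if depth == max_depth or len(codes) < 2:
        return sum(ys) / len(ys)
    best_sse, best_t = None, None
    for t in codes[:-1]:  # every threshold leaves both sides non-empty
        sse = _sse([y for x, y in rows if x <= t]) + _sse([y for x, y in rows if x > t])
        if best_sse is None or sse < best_sse:
            best_sse, best_t = sse, t
    left = [(x, y) for x, y in rows if x <= best_t]
    right = [(x, y) for x, y in rows if x > best_t]
    return (best_t, _build(left, depth + 1, max_depth), _build(right, depth + 1, max_depth))


def _predict(node, code):
    """Follow thresholds from the root down to a leaf value."""
    while isinstance(node, tuple):
        threshold, left, right = node
        node = left if code <= threshold else right
    return node


def fit_tree_encoder(categories, target, max_depth=1):
    """Learn a {category: encoded value} mapping from one feature and the target."""
    codes = {c: i for i, c in enumerate(sorted(set(categories)))}  # arbitrary integer codes
    tree = _build([(codes[c], y) for c, y in zip(categories, target)], 0, max_depth)
    # Each category is replaced by the tree's prediction for its integer code.
    return {c: _predict(tree, code) for c, code in codes.items()}


city = ["A", "A", "B", "B", "C", "C", "D", "D"]
price = [1, 3, 2, 4, 10, 12, 11, 13]
mapping = fit_tree_encoder(city, price, max_depth=1)
encoded = [mapping[c] for c in city]  # original values replaced by predictions
print(mapping)  # {'A': 2.5, 'B': 2.5, 'C': 11.5, 'D': 11.5}
```

With `max_depth=1` the single split pools A with B and C with D. Passing `max_depth=2` gives every category its own leaf, so each one is encoded with its own mean target (A 2.0, B 3.0, C 11.0, D 12.0).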

Some Important Points

When performing categorical variable encoding with Decision Tree Encoding, keep the following points in mind:

- Before using this technique, split the dataset into train and test sets.

- Fit the encoder on the train set only.

- Use the fitted encoder to encode the values of both the train and test sets.

- The target variable can be either numerical (regression) or categorical (classification).

- If a category is absent from the train set when the encoder is fitted but appears in the test set, the encoder has no prediction for it and raises an error for that value.
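The points above can be illustrated with the same hypothetical pure-Python encoder, repeated so the block runs on its own. The `transform` helper and the pre-split toy data are illustrative assumptions; raising `ValueError` for an unseen category is this sketch's choice and may differ from a given library's behaviour.

```python
# Hypothetical pure-Python sketch: fit on train only, encode train and test,
# fail on categories unseen during fitting. Assumes a numeric target.


def _sse(ys):
    """Sum of squared errors of ys around their mean."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def _build(rows, depth, max_depth):
    """Grow a squared-error regression tree on (code, target) rows."""
    ys = [y for _, y in rows]
    codes = sorted({x for x, _ in rows})
    if depth == max_depth or len(codes) < 2:
        return sum(ys) / len(ys)  # leaf: mean target of its rows
    best_sse, best_t = None, None
    for t in codes[:-1]:  # every threshold leaves both sides non-empty
        sse = _sse([y for x, y in rows if x <= t]) + _sse([y for x, y in rows if x > t])
        if best_sse is None or sse < best_sse:
            best_sse, best_t = sse, t
    left = [(x, y) for x, y in rows if x <= best_t]
    right = [(x, y) for x, y in rows if x > best_t]
    return (best_t, _build(left, depth + 1, max_depth), _build(right, depth + 1, max_depth))


def _predict(node, code):
    """Follow thresholds from the root down to a leaf value."""
    while isinstance(node, tuple):
        threshold, left, right = node
        node = left if code <= threshold else right
    return node


def fit_tree_encoder(categories, target, max_depth=1):
    """Learn a {category: encoded value} mapping from one feature and the target."""
    codes = {c: i for i, c in enumerate(sorted(set(categories)))}
    tree = _build([(codes[c], y) for c, y in zip(categories, target)], 0, max_depth)
    return {c: _predict(tree, code) for c, code in codes.items()}


def transform(mapping, values):
    """Encode values using a mapping fitted on the train set only."""
    unseen = sorted(set(values) - set(mapping))
    if unseen:
        # The fitted tree has no prediction for categories it never saw.
        raise ValueError(f"categories not seen during fit: {unseen}")
    return [mapping[v] for v in values]


# Hypothetical data, already split into train and test sets.
train_x = ["A", "A", "B", "B", "C", "C", "D", "D"]
train_y = [1, 3, 2, 4, 10, 12, 11, 13]
test_x = ["B", "D", "A"]

mapping = fit_tree_encoder(train_x, train_y)  # fit on the train set only
train_enc = transform(mapping, train_x)
test_enc = transform(mapping, test_x)
print(test_enc)  # [2.5, 11.5, 2.5]

try:
    transform(mapping, ["A", "E"])  # "E" never appeared in the train set
except ValueError as err:
    print(err)  # categories not seen during fit: ['E']
```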

Advantages

- This technique is quite simple to implement.

- It does not expand the feature space.

- It creates a monotonic relationship between the encoded variable and the target.

- Because of this monotonic relationship, it is well suited to linear models.
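The monotonic relationship follows from how the encoding is built: on the training data, each encoded value is the mean target of the rows in its leaf, so sorting rows by encoded value also sorts them by mean target, even when the arbitrary category codes are not ordered that way. The check below reuses the hypothetical pure-Python encoder with made-up data; `mean_target_by` is an illustrative helper.

```python
# Hypothetical pure-Python check of the monotonic relationship on training data.
# Assumes a numeric target and a squared-error regression tree.


def _sse(ys):
    """Sum of squared errors of ys around their mean."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def _build(rows, depth, max_depth):
    """Grow a squared-error regression tree on (code, target) rows."""
    ys = [y for _, y in rows]
    codes = sorted({x for x, _ in rows})
    if depth == max_depth or len(codes) < 2:
        return sum(ys) / len(ys)  # leaf: mean target of its rows
    best_sse, best_t = None, None
    for t in codes[:-1]:
        sse = _sse([y for x, y in rows if x <= t]) + _sse([y for x, y in rows if x > t])
        if best_sse is None or sse < best_sse:
            best_sse, best_t = sse, t
    left = [(x, y) for x, y in rows if x <= best_t]
    right = [(x, y) for x, y in rows if x > best_t]
    return (best_t, _build(left, depth + 1, max_depth), _build(right, depth + 1, max_depth))


def _predict(node, code):
    """Follow thresholds from the root down to a leaf value."""
    while isinstance(node, tuple):
        threshold, left, right = node
        node = left if code <= threshold else right
    return node


def fit_tree_encoder(categories, target, max_depth=1):
    """Learn a {category: encoded value} mapping from one feature and the target."""
    codes = {c: i for i, c in enumerate(sorted(set(categories)))}
    tree = _build([(codes[c], y) for c, y in zip(categories, target)], 0, max_depth)
    return {c: _predict(tree, code) for c, code in codes.items()}


def mean_target_by(keys, target):
    """Mean target for each distinct key, ordered by key."""
    groups = {}
    for k, y in zip(keys, target):
        groups.setdefault(k, []).append(y)
    return {k: sum(ys) / len(ys) for k, ys in sorted(groups.items())}


city = ["A", "A", "B", "B", "C", "C"]
price = [10, 12, 1, 3, 5, 7]

# Raw arbitrary codes: mean price per code is NOT monotonic in the code.
raw_codes = {c: i for i, c in enumerate(sorted(set(city)))}
raw_means = mean_target_by([raw_codes[c] for c in city], price)
print(raw_means)  # {0: 11.0, 1: 2.0, 2: 6.0}

# Tree-encoded values: mean price per encoded value equals the value itself.
mapping = fit_tree_encoder(city, price, max_depth=2)
enc_means = mean_target_by([mapping[c] for c in city], price)
print(enc_means)  # {2.0: 2.0, 6.0: 6.0, 11.0: 11.0}
```

This holds on the data the tree was fitted on; on new data, the mean target per encoded value can drift away from the encoded value.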

Disadvantages

- This technique can be used only for categorical variables.

- It is prone to overfitting.

- It is difficult to cross-validate with current libraries.
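A rare category shows how the overfitting arises. In the made-up data below, `M` appears only once. Without a depth limit (`max_depth=None` in this hypothetical sketch) the tree gives `M` its own leaf, so its encoding is exactly that row's target; a depth-1 tree pools `M` with `Z` instead. Which categories are pooled also depends on the arbitrary integer order, which is one reason the tree depth needs to be chosen carefully, for example with cross-validation.

```python
# Hypothetical pure-Python illustration of overfitting with a rare category.
# Assumes a numeric target and a squared-error regression tree.


def _sse(ys):
    """Sum of squared errors of ys around their mean."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def _build(rows, depth, max_depth):
    """Grow a regression tree on (code, target) rows; max_depth=None means unlimited."""
    ys = [y for _, y in rows]
    codes = sorted({x for x, _ in rows})
    if depth == max_depth or len(codes) < 2:
        return sum(ys) / len(ys)  # leaf: mean target of its rows
    best_sse, best_t = None, None
    for t in codes[:-1]:
        sse = _sse([y for x, y in rows if x <= t]) + _sse([y for x, y in rows if x > t])
        if best_sse is None or sse < best_sse:
            best_sse, best_t = sse, t
    left = [(x, y) for x, y in rows if x <= best_t]
    right = [(x, y) for x, y in rows if x > best_t]
    return (best_t, _build(left, depth + 1, max_depth), _build(right, depth + 1, max_depth))


def _predict(node, code):
    """Follow thresholds from the root down to a leaf value."""
    while isinstance(node, tuple):
        threshold, left, right = node
        node = left if code <= threshold else right
    return node


def fit_tree_encoder(categories, target, max_depth=1):
    """Learn a {category: encoded value} mapping from one feature and the target."""
    codes = {c: i for i, c in enumerate(sorted(set(categories)))}
    tree = _build([(codes[c], y) for c, y in zip(categories, target)], 0, max_depth)
    return {c: _predict(tree, code) for c, code in codes.items()}


city = ["A", "A", "A", "M", "Z", "Z", "Z"]
price = [1, 2, 3, 9, 10, 11, 12]

deep = fit_tree_encoder(city, price, max_depth=None)  # no depth limit
shallow = fit_tree_encoder(city, price, max_depth=1)  # single split
print(deep)     # {'A': 2.0, 'M': 9.0, 'Z': 11.0}  <- M memorises its one target
print(shallow)  # {'A': 2.0, 'M': 10.5, 'Z': 10.5}  <- M pooled with Z
```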

Practical

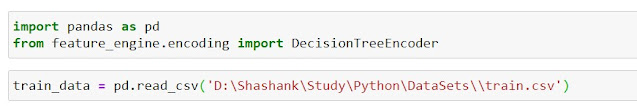

1. Importing the Libraries


2. Data Cleaning & View


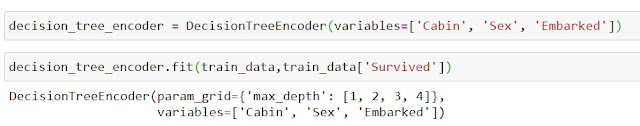

3. Initializing the Decision Tree Encoder


4. Transforming with the Decision Tree Encoder


5. Verifying Data

