Introduction

One of the most talked-about and widely used methods for encoding categorical variables is "One Hot Encoding". Most of us have seen or heard of it somewhere in our data science journey, and it shows up in many Data Science and Machine Learning videos.

So, what makes this technique so special that everyone likes it?

One Hot Encoding represents each category of a categorical variable with its own binary variable that takes only the values 1 and 0: the value 1 indicates that the observation has that category, and 0 indicates that it does not. As a result, a variable with k distinct categories is replaced by k new columns, one per category.

Let's look at an example to understand it better:

[Figure: Dummy One Hot Encoding]
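Since the example figure is not reproduced here, the following is a minimal from-scratch sketch of the same idea in plain Python. The `Color` values and the `one_hot` helper are hypothetical, chosen only for illustration; categories are sorted so the column order is stable.

```python
def one_hot(values):
    """Return (categories, rows): one 0/1 column per distinct category."""
    # Each distinct category becomes one new column (sorted for a stable order).
    categories = sorted(set(values))
    # Each row has a 1 in the column of its own category and 0 everywhere else.
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


# Hypothetical "Color" column with three distinct categories.
colors = ["red", "green", "blue", "green", "red"]
categories, encoded = one_hot(colors)

print(categories)  # ['blue', 'green', 'red']
for value, row in zip(colors, encoded):
    print(value, row)  # e.g. red [0, 0, 1]
```

Three distinct colours produce three binary columns, and every row contains exactly one 1.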

Some Important Points

When performing categorical variable encoding with One Hot Encoding, there are a few points to keep in mind:

- Before using this technique, we need to divide the dataset into train and test sets.

- Fit the encoder on the train set only.

- Use the fitted encoder to transform both the train and test sets.

- The technique can be applied to numerical fields as well as categorical ones; each distinct numeric value is then treated as a separate category.

- If the test set contains a category that did not appear in the train set when the encoder was fitted, the encoder has no column for it and raises an error for such values (this is, for example, the default behaviour of scikit-learn's OneHotEncoder).
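The fit-on-train, transform-both workflow and the unseen-category error can be sketched from scratch as below. `SimpleOneHotEncoder` and its `ignore_unknown` flag are hypothetical names for illustration, not a library API. The lenient mode mimics the idea behind scikit-learn's `handle_unknown="ignore"` option, which encodes unseen categories as all zeros.

```python
class SimpleOneHotEncoder:
    """Hypothetical single-column one hot encoder (illustration only)."""

    def __init__(self, ignore_unknown=False):
        # If True, unseen categories become an all-zero row instead of an error.
        self.ignore_unknown = ignore_unknown
        self.categories_ = None

    def fit(self, values):
        # Learn the categories from the TRAIN set only.
        self.categories_ = sorted(set(values))
        return self

    def transform(self, values):
        if self.categories_ is None:
            raise RuntimeError("call fit() before transform()")
        rows = []
        for v in values:
            if v not in self.categories_:
                if not self.ignore_unknown:
                    raise ValueError(f"unknown category {v!r} not seen during fit")
                rows.append([0] * len(self.categories_))
                continue
            rows.append([1 if v == c else 0 for c in self.categories_])
        return rows


train = ["male", "female", "female", "male"]
test = ["female", "male", "unknown"]  # "unknown" never appears in train

enc = SimpleOneHotEncoder().fit(train)
print(enc.categories_)         # ['female', 'male']
print(enc.transform(train))    # [[0, 1], [1, 0], [1, 0], [0, 1]]

# Strict mode: the unseen test category raises an error.
try:
    enc.transform(test)
    error_message = None
except ValueError as err:
    error_message = str(err)
print("error:", error_message)

# Lenient mode: the unseen category is encoded as all zeros.
lenient = SimpleOneHotEncoder(ignore_unknown=True).fit(train)
print(lenient.transform(test))  # [[1, 0], [0, 1], [0, 0]]
```

The categories are learned only during `fit`, so the test set cannot introduce new columns. This is why the points above insist on fitting on the train set and reusing that fitted encoder for the test set.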

Advantages

- Easy and straightforward to implement.

- Makes no assumptions about the distribution or the categories of the variable.

- All the information about the categorical variables is kept intact.

- Well suited to linear models, since each category gets its own coefficient and no artificial ordering is imposed.

Disadvantages

- It expands the feature space: one new column per category, which grows quickly for variables with many categories.

- No extra information is added while encoding.

- Many dummy variables may be identical, introducing redundant information.
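The first and last disadvantages can be made concrete with a short sketch on a hypothetical higher-cardinality `cities` column. It shows how one column turns into many. It also shows one well-known form of redundancy: every row of the k dummies sums to 1, so any single dummy can be rebuilt from the other k - 1.

```python
def one_hot(values):
    """Return (categories, rows): one 0/1 column per distinct category."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


# Hypothetical column with 6 distinct cities.
cities = ["Paris", "Tokyo", "Lima", "Oslo", "Cairo", "Lima", "Delhi", "Paris"]
categories, rows = one_hot(cities)

# One original column became one column per distinct category.
print(len(categories))  # 6

# Every row sums to 1 ...
print(all(sum(r) == 1 for r in rows))  # True

# ... so the last dummy equals 1 minus the sum of the others (redundant).
last_rebuilt = [1 - sum(r[:-1]) for r in rows]
print(last_rebuilt == [r[-1] for r in rows])  # True
```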

A Hidden Concept

Practical

1. Importing the Libraries

[Figure: Importing One Hot Encoder, libraries and data]

2. Viewing the Data

[Figure: Dataset preview]

3. Initializing the One Hot Encoder

[Figure: Initializing One Hot Encoder]

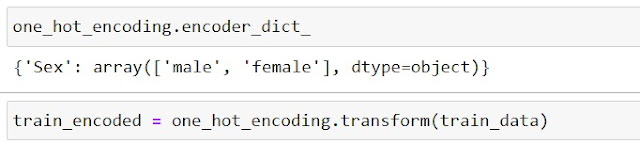

4. Transforming with the One Hot Encoder

[Figure: One Hot Encoder transform]

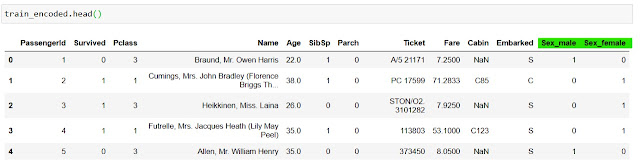

5. Verifying Data

[Figure: One Hot Encoded 'Sex' variable]
