When it comes to multivariate imputation, one of the most common techniques is KNN imputation. The term "KNN impute" may be new, but KNN itself is familiar to most people working in this field. If it is new to you, don't worry: we define it in the next section.

Let's define KNN for readers who are new to it.

What is KNN?

KNN, short for K-Nearest Neighbours, is a way of making a prediction for a data point by looking at the 'K' data points that are closest to it, based on the distance between them.

Let's explain it with an example. Suppose we have Lego pieces in 4 colours that are already sorted into 4 piles, and we pick up one more piece whose shade we are not sure about. We compare it with the pieces on the floor, pick the K pieces whose colour is the closest match, and put the new piece in the pile that most of those K pieces belong to.

This process is how KNN is performed. We can vary the value of K as per our need: a very small K makes the result sensitive to a few odd pieces, while a very large K blurs the difference between the groups.

*Note:- Randomly guessing K centroids, assigning every point to the nearest centroid and then shifting the centroids to minimise the distance is a different algorithm, K-Means clustering. KNN does not build centroids; it only calculates the distance from a point to the other points and uses the K closest ones.

KNN is a very common machine learning algorithm for classification and regression.
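To make the idea of "nearest neighbours" concrete, here is a minimal sketch in plain NumPy. The Lego pieces, their (red, green, blue) values and the helper name knn_predict are all hypothetical, chosen only for illustration.

```python
from collections import Counter

import numpy as np


def knn_predict(points, labels, query, k=3):
    """Return the majority label among the k points closest to `query`."""
    # Euclidean distance from the query to every known point
    distances = np.linalg.norm(points - query, axis=1)
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Majority vote among the neighbours' labels
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical Lego pieces described by (red, green, blue) intensity
points = np.array([
    [250, 10, 10], [240, 20, 15],   # red pieces
    [10, 240, 20], [20, 250, 10],   # green pieces
    [10, 20, 245], [15, 10, 250],   # blue pieces
])
labels = ["red", "red", "green", "green", "blue", "blue"]

new_piece = np.array([230, 30, 25])
predicted = knn_predict(points, labels, new_piece, k=3)
print(predicted)
```

With k=3, two of the three closest pieces are red, so the new piece is labelled red.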

We can read more about KNN from HERE

KNN Imputation

Now, we may wonder how KNN, which we just used to sort Lego pieces, can be used for imputation.

Relax, it's quite simple. Just as we matched Lego pieces by colour (1 variable), in missing data imputation the KNN algorithm finds the observations that are closest to the incomplete observation on the variables that are observed, and predicts the missing value from those nearest neighbours.

We can easily perform KNN imputation using the KNNImputer class from the scikit-learn (sklearn) library of Python. It uses a Euclidean distance that ignores missing coordinates (nan_euclidean) to find the nearest neighbours, and then the values from the neighbours are averaged uniformly or weighted by distance for imputation. If an observation has multiple missing values, the neighbours used for imputing each variable may be different. When the number of available neighbours is less than n_neighbors and there are no defined distances to the training set, the training set average for that feature is used during imputation.
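As a small worked example of the behaviour described above, the sketch below runs KNNImputer on a tiny hand-made array (the numbers are illustrative, not the article's dataset) and also prints the nan_euclidean distances that drive the neighbour search.

```python
import numpy as np
from sklearn.impute import KNNImputer
from sklearn.metrics.pairwise import nan_euclidean_distances

# Tiny illustrative array with two missing entries
X = np.array([
    [1.0, 2.0, np.nan],
    [3.0, 4.0, 3.0],
    [np.nan, 6.0, 5.0],
    [8.0, 8.0, 7.0],
])

# Distances use only the coordinates present in both rows and are
# scaled up to compensate for the coordinates that were skipped
distances = nan_euclidean_distances(X)
print(distances.round(2))

# Average the 2 nearest neighbours that have the feature observed
imputer = KNNImputer(n_neighbors=2, weights="uniform")
X_imputed = imputer.fit_transform(X)
print(X_imputed)
```

The missing value in the first row is filled with 4.0, the mean of 3 and 5 from its two nearest rows, and the missing value in the third row is filled with 5.5, the mean of 3 and 8.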

Time to flex our hands and see how we can practically achieve this.

Code

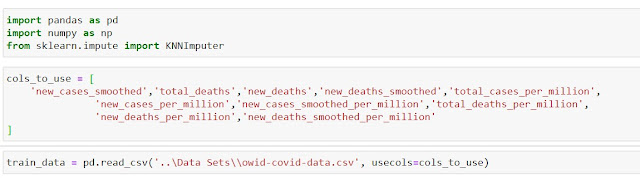

1. Importing the libraries and data

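A minimal sketch of this step, assuming a small made-up DataFrame built in memory. The column names age, fare and siblings and all values are hypothetical stand-ins, not the article's dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in data with a few missing values (np.nan)
df = pd.DataFrame({
    "age":      [22.0, 38.0, np.nan, 35.0, 54.0, np.nan, 27.0, 14.0],
    "fare":     [7.25, 71.28, 7.92, 53.10, np.nan, 8.46, 11.13, 30.07],
    "siblings": [1, 1, 0, 1, 0, 0, np.nan, 1],
})

print(df.head())
```

With a real dataset, the DataFrame would typically come from a file, for example through pd.read_csv.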

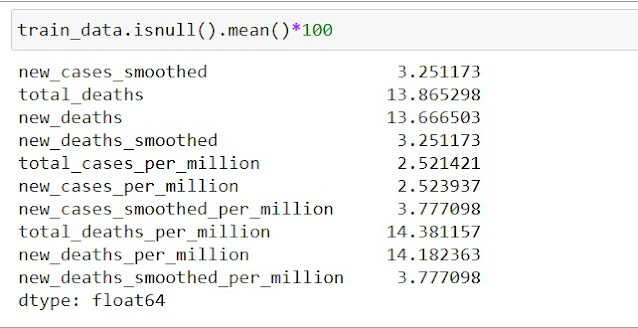

2. Checking Missing Data

Percentage of missing data
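One way to compute the percentage of missing data per column is sketched below, again on a hypothetical DataFrame (column names and values are made up for illustration).

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in data
df = pd.DataFrame({
    "age":      [22.0, 38.0, np.nan, 35.0, 54.0, np.nan, 27.0, 14.0],
    "fare":     [7.25, 71.28, 7.92, 53.10, np.nan, 8.46, 11.13, 30.07],
    "siblings": [1, 1, 0, 1, 0, 0, np.nan, 1],
})

# isnull() gives True/False per cell; the column mean of that mask
# is the fraction of missing values, so multiply by 100 for percent
missing_pct = df.isnull().mean() * 100
print(missing_pct)
```

Here age has 2 of 8 values missing (25%), while fare and siblings have 1 of 8 missing each (12.5%).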

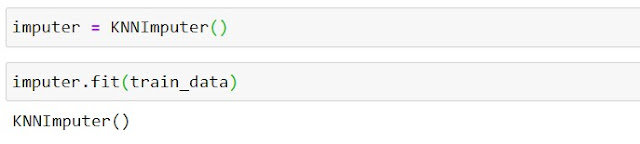

3. Loading KNN Imputer

Using KNN Imputer
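Loading the imputer can be sketched as follows. The imputer is created with default settings, and get_params() is used only to display those defaults.

```python
from sklearn.impute import KNNImputer

# Default imputer: 5 neighbours, uniform weights, nan_euclidean metric
imputer = KNNImputer()

print(imputer.get_params())
```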

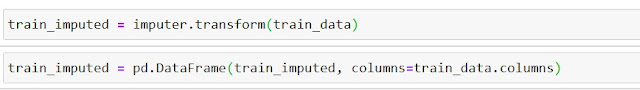

4. Transforming Data -- KNN Imputation

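A sketch of the transformation step, assuming the same kind of hypothetical DataFrame as a stand-in for real data. fit_transform returns a NumPy array, so the result is wrapped back into a DataFrame to keep the column names.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer

# Hypothetical stand-in data
df = pd.DataFrame({
    "age":      [22.0, 38.0, np.nan, 35.0, 54.0, np.nan, 27.0, 14.0],
    "fare":     [7.25, 71.28, 7.92, 53.10, np.nan, 8.46, 11.13, 30.07],
    "siblings": [1, 1, 0, 1, 0, 0, np.nan, 1],
})

imputer = KNNImputer()  # defaults: n_neighbors=5, weights="uniform"

# Learn from the data and fill the missing cells in one call
imputed_array = imputer.fit_transform(df)

# Restore column names and index lost in the array conversion
df_imputed = pd.DataFrame(imputed_array, columns=df.columns, index=df.index)
print(df_imputed)
```

Because the neighbour search is distance based, columns on a larger scale can dominate the distance. Scaling the features before imputation is a common choice, but it is not shown here.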

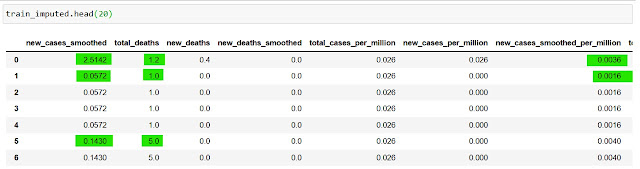

5. Verifying the Imputation

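Verification can be sketched by counting missing values before and after imputation and by looking at the rows that were filled. The DataFrame is again a hypothetical stand-in.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer

# Hypothetical stand-in data
df = pd.DataFrame({
    "age":      [22.0, 38.0, np.nan, 35.0, 54.0, np.nan, 27.0, 14.0],
    "fare":     [7.25, 71.28, 7.92, 53.10, np.nan, 8.46, 11.13, 30.07],
    "siblings": [1, 1, 0, 1, 0, 0, np.nan, 1],
})

df_imputed = pd.DataFrame(
    KNNImputer().fit_transform(df), columns=df.columns, index=df.index
)

# Missing values per column, before and after
before = df.isnull().sum()
after = df_imputed.isnull().sum()
print(pd.DataFrame({"before": before, "after": after}))

# Show only the rows that originally had a missing value
rows_with_gaps = df.isnull().any(axis=1)
print(df_imputed[rows_with_gaps])
```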

6. Parameters in KNNImputer

While initializing the KNNImputer, we can pass several parameters to change the behaviour of the imputer. In our example we used the default imputer; now let's look at the parameters that are available to us.

A. missing_values:- It is the placeholder for missing values. It can be an int, float, str, np.nan or None. By default, if we do not specify any value, it takes np.nan.

B. n_neighbors:- It specifies the number of neighbouring samples to be used for imputation. By default, it takes 5 neighbours.

C. weights:- It is used to define the weight function that we want to use for predicting the values. By default, it uses 'uniform'. It can take the following values --

i. 'uniform': All neighbouring points are weighted equally for prediction.

ii. 'distance': Neighbours are weighted by the inverse of their distance. The closer the neighbour, the higher the influence, and vice versa.

iii. callable: It is used when we want to define our own weight function. It takes an array of distances and returns an array of weights of the same shape.

D. metric:- The distance metric to be used for searching neighbours. It can take 2 values, 'nan_euclidean' OR a callable (a user-defined function). By default, 'nan_euclidean' is used.

E. copy:- True or False, used to specify whether a copy of the dataset is created before imputation. If False, imputation is done in place whenever possible. Default - True.

F. add_indicator:- True or False, used to append binary indicator columns to the output that mark which values were originally missing. Default - False.
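The sketch below shows a few of these parameters set to non-default values on a tiny illustrative array (the numbers are made up). It uses 2 neighbours, inverse-distance weighting and missing-value indicator columns.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Tiny illustrative array: features 0 and 2 contain a missing value
X = np.array([
    [1.0, 2.0, np.nan],
    [3.0, 4.0, 3.0],
    [np.nan, 6.0, 5.0],
    [8.0, 8.0, 7.0],
])

imputer = KNNImputer(
    missing_values=np.nan,  # placeholder treated as missing
    n_neighbors=2,          # use the 2 closest rows
    weights="distance",     # closer neighbours count more
    add_indicator=True,     # append 0/1 columns flagging missing cells
)
X_out = imputer.fit_transform(X)

# First 3 columns: imputed features; remaining columns: indicators
# for the features that had missing values during fit
print(X_out.round(3))
```

With weights='distance', the first row's missing value moves closer to the value of its nearest neighbour (about 3.667 instead of the uniform mean of 4), and two indicator columns are appended because two features contained missing values.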

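Limitation

1. The process can be time-consuming, because distances between observations have to be calculated to find the neighbours.

Advantages

1. It often provides a more accurate imputation than filling each variable on its own, because it uses the information from the other variables.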

Summary

In this article, we studied the definition and practical implementation of KNN imputation.
