Today, we are dealing with a big problem of Big Data, where a huge amount of data is generated every second and minute. Thus, the issue of storing such a huge amount of data arises, which is managed using various SQL, NoSQL and now NewSQL databases. But still, a problem remains if we store the data as it is generated in our databases, it gets difficult to query such huge data. Thus, there was a need for some technique that could help in splitting the data at the time of storing, providing not only fast and easy access to data but also in easy storage.

To cater for the issue of storing and managing Big Data, Hive was introduced, which further provides concepts like Partitioning and Bucketing to solve the issue of storing and querying huge datasets.

What is Bucketing?

Bucketing in Hive is dividing the data into smaller parts, which are easy to manage. It is used with or without partitioning in the Hive table.

Now, we may doubt if both Bucketing & Partitioning are used to divide the data into smaller parts, then what is the need for the two concepts and How do they differ?

We will be covering Bucketing VS Partitioning in a separate article. I would like to give a small example to provide a clear and separate picture of both.

Example:-

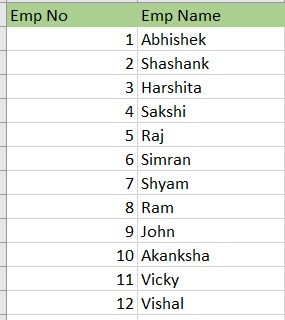

Suppose we have a dataset of 100 people, 1person for each age group, i.e. for age 1 we have 1 person, for age 50 we have 1 person and so on... Now, if we go by the concept of Partitioning then we will be having 100 partitions with 1 row of data. This is not feasible as there are too many partitions with too little data. Now, if we go by the concept of Bucketing, we need to define how many buckets/parts do we need. Suppose we decide 4 buckets in our case then hive will divide the data into 4 parts/buckets of size 25 each (0-24, 25-49, 50-74, 75-100), i.e. having 25 rows of data in each bucket/part.The later way of dividing the data sounds reasonable, as we have limited the number of parts and have also reduced the size of data.

Need of Bucketing

To understand Why the Need for Bucketing was created, we need to have a look at the limitations of Partitioning in Hive:-

- Partitioning is useful if we have a limited number of partitions. i.e. like in the above example, we are having 100 partitions, which is not feasible and also not preferred.

- Data in Hive Partitions should be approximately equal in size. i.e. Suppose we use partitioning of population, based on countries, then the partitions that are created would be highly unbalanced as the majority of the population is from India and China alone.

- Partitions are created based on the distinct values of the column. i.e. if we use partitioning over the "Gender" column, then by default 3 partitions would be created namely 'Male', 'Female' & 'Others'. Thus, we do not have control over the number of partitions.

Features of Bucketing

- We can use bucketing with or without the Partitioning table. i.e. We can either Bucket the world population based on countries directly, or we can Partition the data based on Gender and then bucket based on Age.

- Bucketing uses a hash_function, to decide the values in each bucket. i.e. A hash_function is used to calculate the values for user-defined buckets.

- The records having the same bucketed column, will always be stored in the same bucket.

- Bucketing focuses on creating almost equivalent data parts/buckets.

- CLUSTERED BY clause is used to divide the tables into buckets.

Advantages

- Bucketed table/data offers better efficient sampling as compared to non-bucketed tables.

- It provides us with a flexible option to keep the record sorted in each bucket by one or more columns.

- Join of each bucket becomes an efficient merge-sort.

- Due to merge-sort, map-side joins perform faster on bucketed tables than the non-bucketed tables.

- Query responses are faster for Bucketed tables than non-bucketed tables.

Limitations

- Bucketing doesn't ensure if the tables are populated properly or not.

- We need to manage data loading in buckets by our-self.

Comments

Post a Comment