One of the most popular approaches in Data Science is using tree-based algorithms to predict values. Trees are so popular in this field because every split asks a binary question {Yes: No, 1: 0, True: False}, and when a question cleanly separates Yes from No, the data becomes much easier to analyse.

Thus, when we are dealing with a very large inflow of data, it is always preferable to get to-the-point answers to our questions.

So, why not use the same technique for Discretisation?

How to use a Decision Tree?

To find the optimal bins for the data, this method builds a decision tree. Just like any other decision tree, it assigns each value to one of its 'N' end leaves, thus segregating the values into 'N' different bins.

A quick way to remember how to use this technique:

1) Train a decision tree of limited depth (2, 3 or 4) using the variable we want to discretise and the target.

2) Replace the values with the output returned by the tree.

This process is quite useful because it does not create bins based on the width of the intervals or the number of observations in them; instead, it creates more logical groups/bins driven by the target.

Let's look more closely at the advantages and disadvantages of this technique.
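The two steps above can be sketched in plain Python. This is only a minimal illustration, not a library implementation: the helper names (`best_split`, `fit_tree`, `predict`), the toy `ages`/`spend` data, the squared-error split criterion and the minimum leaf size of 2 are all assumptions made for the example.

```python
def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(xs, ys, min_leaf=2):
    """Return the threshold on x that most reduces total SSE, or None."""
    pairs = sorted(zip(xs, ys))
    best_thr, best_cost = None, sse(ys)
    for i in range(min_leaf, len(pairs) - min_leaf + 1):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical x values
        cost = sse([y for _, y in pairs[:i]]) + sse([y for _, y in pairs[i:]])
        if cost < best_cost:
            best_thr = (pairs[i - 1][0] + pairs[i][0]) / 2
            best_cost = cost
    return best_thr


def fit_tree(xs, ys, depth):
    """Step 1: build a depth-limited tree.

    A leaf is the mean of the target; a node is (threshold, left, right).
    """
    thr = best_split(xs, ys) if depth > 0 else None
    if thr is None:
        return sum(ys) / len(ys)
    left = [(x, y) for x, y in zip(xs, ys) if x <= thr]
    right = [(x, y) for x, y in zip(xs, ys) if x > thr]
    return (
        thr,
        fit_tree([x for x, _ in left], [y for _, y in left], depth - 1),
        fit_tree([x for x, _ in right], [y for _, y in right], depth - 1),
    )


def predict(tree, x):
    """Walk down the tree until a leaf (a plain number) is reached."""
    while isinstance(tree, tuple):
        thr, left, right = tree
        tree = left if x <= thr else right
    return tree


# Hypothetical toy data: the variable to discretise and the target.
ages = [18, 22, 25, 31, 35, 42, 48, 55, 61, 70]
spend = [10.0, 12.0, 11.0, 30.0, 32.0, 31.0, 29.0, 15.0, 14.0, 16.0]

tree = fit_tree(ages, spend, depth=2)

# Step 2: replace each value with the output returned by the tree.
binned = [predict(tree, a) for a in ages]
print(binned)
# [11.0, 11.0, 11.0, 30.5, 30.5, 30.5, 30.5, 15.0, 15.0, 15.0]
```

With this toy data the tree cuts at ages 28 and 51.5, so the ten ages collapse into three bins, each labelled with the mean target value of its leaf.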

Advantages

- The output returned by the decision tree is monotonically related to the target.

- The tree end nodes or bins in the discretised variable show decreased entropy: that is, the observations within each bin are more similar among themselves than to those of other bins.
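The entropy point can be checked with a few lines of arithmetic. The sketch below uses made-up labels and bin names (both are assumptions for illustration only) and compares the Shannon entropy of the whole target with the size-weighted entropy inside the bins.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def weighted_bin_entropy(bins, labels):
    """Average entropy inside the bins, weighted by bin size."""
    groups = {}
    for b, y in zip(bins, labels):
        groups.setdefault(b, []).append(y)
    n = len(labels)
    return sum(len(g) / n * entropy(g) for g in groups.values())


# Hypothetical binary target and the bin each observation fell into.
labels = [0, 0, 0, 1, 1, 1, 1, 0, 0, 1]
bins = ["low"] * 3 + ["mid"] * 4 + ["high"] * 3

before = entropy(labels)                    # entropy without any binning
after = weighted_bin_entropy(bins, labels)  # entropy within the bins
print(before, round(after, 4))
```

Here the target is split evenly between the two classes before binning (1 bit of entropy), while two of the three bins are pure, so the weighted entropy inside the bins drops to roughly 0.28 bits.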

Disadvantages

- Prone to over-fitting. Since there is no perfect way to find the exact number of bins, too many bins can lead to over-fitting.

- Time-consuming, since some tuning of the tree parameters is needed to obtain the optimal number of splits (e.g., tree depth, the minimum number of samples in one partition, the maximum number of partitions, and a minimum information gain).
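One common way to handle this tuning is a cross-validated grid search. The sketch below uses scikit-learn on synthetic data; the data, the grid values and the choice of `neg_mean_squared_error` as the score are assumptions for illustration, not settings taken from this post. In scikit-learn, the parameters listed above roughly correspond to `max_depth`, `min_samples_leaf`, `max_leaf_nodes` and `min_impurity_decrease`.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (assumption): the target is high in the middle of the range.
rng = np.random.default_rng(0)
x = rng.uniform(0, 100, size=300)
y = np.where((x > 30) & (x < 60), 30.0, 12.0) + rng.normal(0, 2.0, size=300)
X = x.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix

# Small, hypothetical grid over depth and minimum leaf size.
param_grid = {"max_depth": [1, 2, 3, 4], "min_samples_leaf": [10, 20]}

search = GridSearchCV(
    DecisionTreeRegressor(random_state=0),
    param_grid,
    cv=3,
    scoring="neg_mean_squared_error",
)
search.fit(X, y)

# The refitted best tree returns one value per leaf, i.e. one value per bin.
x_binned = search.predict(X)
n_bins = len(np.unique(x_binned))
print(search.best_params_, n_bins)
```

Every extra value in the grid multiplies the number of trees that must be trained, which is where the time cost comes from; keeping the depth small also caps the number of bins at `2 ** max_depth`.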

Practical

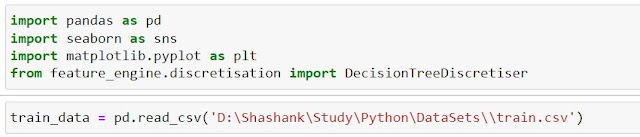

1. Importing the Libraries

Figure: Importing the Decision Tree Discretiser, libraries and data

2. Data Visualization

Figure: Data visuals before binning

3. Cleaning the data

Figure: Cleaning the data

4. Using the Decision Tree Discretiser

Figure: Initializing the Decision Tree Discretiser

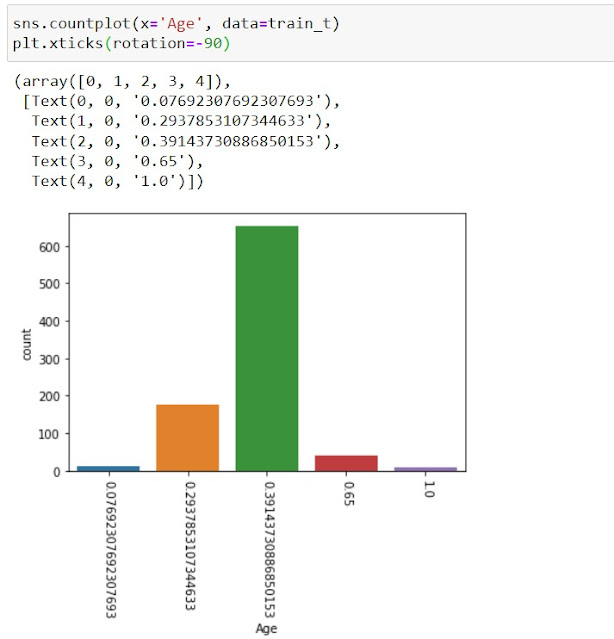

5. Visualizing the End Result

Figure: Decision Tree Discretisation result

The decision tree has grouped all the data into 5 bins, which reflect the shape of the original graph: high in the middle and lower at the ends.
